Those of you of a certain age may recall Harry Secombe warbling a marvellous ditty that began, ‘If I ruled the world…’ Charming stuff. But we’re in a different world now, one that some believe is on the verge of being ruled by AI. So-called generative AI like ChatGPT has taken the internet by storm recently. We set out to explore what’s really happening, and how it might affect your business.

Gilmar: With me today are Ezri Carlebach and Lindsay Cast. Ezri, an Associate Partner at GW+Co, is a self-confessed science fiction fanatic and is currently developing an online course on all things SF. It’s right up there with his interest in anthropology and occasional double-bass playing. Lindsay is our Head of Design. He likes to keep things neat and simple, and has recently been fascinated by, and deeply impressed with, his experience of various AI platforms. Ezri, you suggested the title for this conversation, which comes from a 1963 pop song. Why?

Ezri: Well, the opening line of the song is, “If I ruled the world, every day would be the first day of spring”. And that encapsulates the con trick of ChatGPT and other systems like it. If you were to ask ChatGPT what would happen if AI ruled the world I bet it would say ‘every day will be the first day of spring’… which is nonsense. It’s poetic in the context of the song, but ChatGPT – and I’m jumping ahead here – is a con that is producing not just unhelpful stuff, but some potentially seriously damaging stuff.

If I ruled the world, every day would be the first day of spring. Visualised by Midjourney.

Gilmar: That’s a strong assertion to kick off with. So, should we believe the hype?

Lindsay: I think we should frame this by saying what AI is, because it’s not a great name. These systems are certainly artificial, but they’re not necessarily intelligent. They can emulate intelligence, and if you haven’t seen the information they produce before, it comes across as extremely credible. There is a danger that we think of ChatGPT as an oracle that can tell us everything we need to know. However, if you take it for what it was originally intended, which is to replace online customer help services, then actually it’s very good.

Gilmar: So what is it then? Because what you’re outlining is something very different from what everyone has been talking about.

Ezri: There are two questions. First, the question of specifically what it’s made of, and as Lindsay was saying it’s a search engine with a slightly different delivery of its results. But the nature of that delivery is what makes it so worrying, because it is essentially a con. The definition of a con is something that gives people confidence, usually while taking their money. By the way, Microsoft are about to launch a subscription service to a ChatGPT-enabled version of their Bing search tool, so it probably isn’t going to remain free much longer.

But it also gives people confidence in what it’s saying because it sounds so plausible. However, all the analyses I’ve looked at show significant levels both of inaccuracy and of bias towards the usual suspects – white, able-bodied, heterosexual men. A company called Textio conducted a detailed assessment of how ChatGPT performs on certain HR tasks, like writing job descriptions, approaching potential candidates and writing feedback for employees. In a large majority of cases, it displays bias against Black people, against women, people with disabilities, and so on. ChatGPT is trawling the internet and not always finding good stuff, and you don’t necessarily know that because of the level of confidence it gives you. These chat bots, or natural language modelling AIs, are in their early days. Anything they say should be taken with a large pinch of salt.

Lindsay: You’re right, in that it will only ever be as good as the data that goes into it. But it’s just like the internet in that regard. As long as people don’t treat it as the truth, but instead as a user-friendly tool that’s trying to present information that it thinks is correct in a nice, digestible way, then I think there’s still value in it.

Ezri: I worry about that phrase, ‘it thinks is correct’. It doesn’t think, it’s just churning stuff out without any intelligence or consciousness. The ‘caveat emptor’ approach, where it’s up to users to beware, is risky because there’s so much hype people are easily led to believe it’s infallible. That’s why you get people thinking it’s the Oracle of Delphi and asking it what’s going to happen in the future, what stocks to invest in, things that could actually cause them significant trouble.

AI, if it were an oracle that could tell people the future. Visualised by Midjourney.

Lindsay: I think that speaks to the problem you’re trying to solve with these things. Seeing it as the Oracle of Delphi, trying to get it to tell the future or recommend stocks, is a complete misuse of the tool. However, there must be a degree to which you would feel comfortable with an interface like ChatGPT as a tool to collate basic information and consolidate it nicely for you. To me, it’s just an advanced Google search.

Gilmar: Is it the case that these systems will become more sophisticated, and these concerns are just teething problems? Or are we saying AI can’t be trusted sufficiently to not have to check whether its output is correct? At the moment, it’s easier to ask ChatGPT than Google, but with Google I can see where search results come from so I can verify whether they at least look credible. Can that be overcome?

Ezri: The more sophisticated it becomes, the harder it will be to evaluate what it’s doing. I think it’s extremely useful in some contexts, but right now we’re in a Barnum and Bailey scenario. It’s all a circus, and some people will be left broke and angry as a result. That doesn’t strike me as a good thing, either for the people who use it or for AI, because it undermines trust in the AI industry.

Lindsay: I’d say it’s more than teething problems. These are problems this sort of system is bound to face. I’m hoping that all the talk around it will lead to more regulation. Maybe I’m being optimistic here, but I think it’s about credibility. I could see a world where being able to say, “okay, we’ve built this system, this is where we got the information” makes that system more focused and more targeted. You could keep the easy interface, but you’d have the right tool for the right job. We all want the ability to get somewhere fast, and ChatGPT gets there with 90% accuracy, maybe less, but it does get there quickly. That first layer of research, the information you would find for yourself now, ChatGPT does in seconds. That’s one of its main attractions.

Ezri: It’s still a double-edged sword. Using it for chatbots on retail websites is brilliant, because waiting to speak to a human agent is expensive and can cause a lot of delay. If a chatbot can answer customer questions in a flash, that’s definitely an advantage. Businesses are always looking for ways to do things faster or cheaper, or both. But the problem is it’s being presented as a window on all human knowledge, or at least all the knowledge on the internet. With Microsoft already offering a premium subscription service using ChatGPT, it begins to look like the ultimate confidence trick. That’s not my phrase, by the way, it comes from media commentator Ted Gioia.

There are really good uses for AI generally, and in business in particular. I think the single most important thing that businesses can do is focus on AI literacy, supporting their people to deal with the consequences of this kind of technology. A recent project I was involved in, looking at adoption of AI tools in UK law and accountancy firms, found all sorts of unintended or unexpected consequences, for example in professional training pathways, client relationships, business model innovation, and so on. Those are areas where the rubber hits the road, with real-world implications, and often the impact is not the obvious “it does everything faster”.

Gilmar: We’ve touched on the future of jobs here. Let me ask slightly provocatively: Are we all going to be out of a job in 10 years?

Lindsay: Some people will be, but there’ll be more jobs to replace the ones that disappear. The people that seem to be most worried are content writers, and people who work in call centres. Also, while they have slightly less reason to worry, people like lawyers and architects, professional services providers where clients might think what they do could be automated and done in a flash by AI. Looking forward more positively, if we harness it right we’ll do the easy stuff much more quickly. Then we can do the harder stuff ourselves and spend more time on it. I think some of the failed forays into getting AI to write content prove there’s still a long way to go before you can remove the human touch altogether. And you can’t use AI to create something new, it can only do what it’s programmed to do. It can’t do the thinking for you.

A robot in a job interview. Visualised by Midjourney.

Gilmar: That seems like a big point – it can only rehash. There’s been a debate along those lines in the visual design community. I felt the more interesting contributors were saying it’s a wake-up call for those who maybe don’t use their imagination and creativity enough and rely on vanilla designs, or surfing for ideas. Maybe something like DALL-E, the AI image generator, or ChatGPT, can make us value our own imagination more. Let’s talk about other potential opportunities.

Lindsay: Perhaps the way I’ve been using these tools could be a model for how businesses might use them. If they’ve got to make creative decisions they could use AI to see what’s already been done. For example, if we’re brainstorming for a creative idea we could ask an AI tool, “show me everything that’s been done on this subject”. Then we’d ensure what we do is nothing like that. There are similar use cases, where you find everything that’s been published on a subject, and then say, “well, here’s an opposing view”. Again, it comes down to the quality of the data source, but I think the ChatGPT type of interface gives people a chance to do these things very quickly.

Ezri: There are two things I would argue for. First, grow AI literacy throughout your business, because it will affect every business at some point and in unpredictable ways. At the same time, focus on supporting skills that can’t be replaced by AI, creative skills and relationship skills and caring skills, because they will be valuable in your business anyway. It’s like debossing or embossing. Is AI just happening to you, is it debossing its imprint on your business? Or are you pushing out, embossing your business with the ideals and capabilities you actually want?

Gilmar: I love the printing analogy!

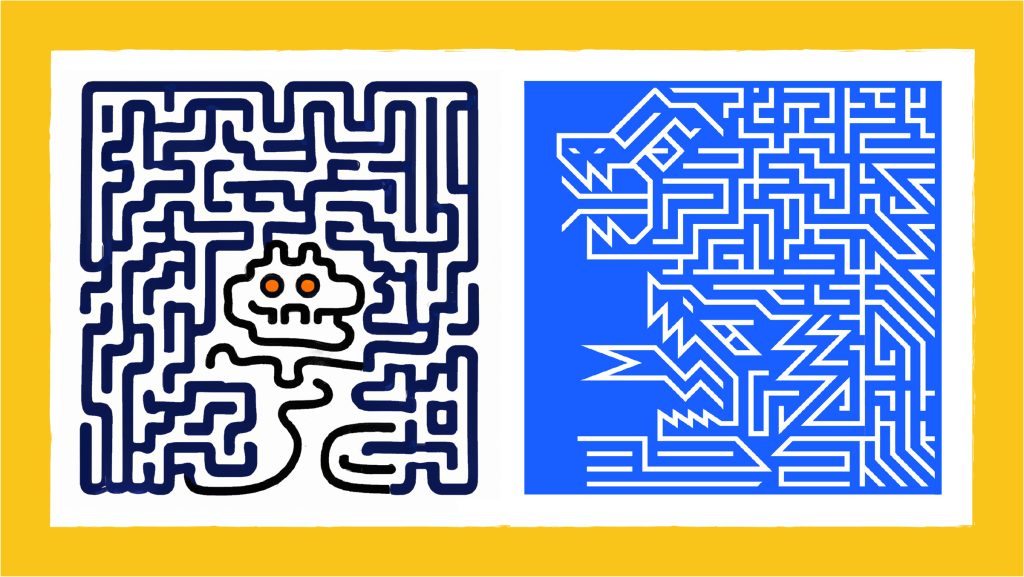

Lindsay: A good example is the Identec Solutions story. That creative concept came about from a conversation Gilmar had with their CEO, who mentioned Toyota’s Lean production techniques, specifically Muda, the notion of waste being the productivity killer. That was a very emotive idea to land on, something that AI would never have landed on. We developed the idea of Muda as the monster in the maze that kills productivity, and built an entire brand identity around it.

DALL-E’s first take on ‘Monster shapes formed out of a maze’ (left) next to the GW+Co design team’s solution.

Ezri: Also, we facilitate collaborative design solutions as part of the core GW+Co proposition, and there’s no way AI is going to get a group of humans to collaborate better. Not for decades, if not centuries, not until we get hyper-realistic androids like Data from Star Trek: The Next Generation, walking into a room and facilitating a workshop. Until then, it will remain a uniquely human capability, and a particularly strong GW+Co capability!

Lindsay: So we agree, AI is a set of tools that businesses need to understand better if they’re going to use them to do what they do better. Our advice seems to be to treat them as such. Don’t think the tool is going to replace you – and don’t look to it to replace your people. Instead, look to it to do some of the easy stuff quicker, so your people can do what they do, better.

Gilmar: Thank you both very much for a fascinating conversation. I guess it’s all about helping people to understand what AI is and what it can do, and in such a way that everyone, including those with fears – justified or not – can participate.

See you next time!
